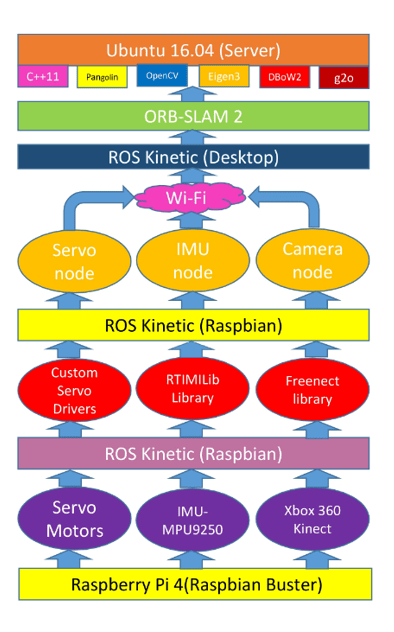

In this project we create a server-node relationship in which the laptop serves as the server and the robot parts are the slaves. The server runs Ubuntu 16.04, on which ORB-SLAM 2 is installed. When the robot starts moving, the nodes for the camera, motors, and sensors are created in real time. Each node carries some information (a message); for example, the camera node publishes raw, uncompressed images. ROS (Robot Operating System), installed on the Raspberry Pi 4, sends this information to ROS on the Ubuntu 16.04 laptop. ORB-SLAM 2 uses this information to perform tracking, mapping, and loop closure.
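The node/topic arrangement described above can be illustrated with a toy, in-process publish/subscribe bus. This is a minimal sketch, not the ROS API: in ROS Kinetic the same roles are played by `rospy.Publisher`, `rospy.Subscriber` and the ROS master, with messages carried over the network between the Raspberry Pi and the laptop. The class `TopicBus`, the topic names `/camera/image_raw` and `/imu/data`, and the message fields are hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class TopicBus:
    """Toy in-process stand-in for ROS topic routing (hypothetical, not the ROS API)."""

    def __init__(self) -> None:
        # Maps a topic name to the callbacks subscribed to it.
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: dict) -> int:
        # Deliver the message to every subscriber and report how many received it.
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


# Server (laptop) side: collect camera messages, e.g. to hand them to ORB-SLAM 2.
received: List[dict] = []
bus = TopicBus()
bus.subscribe("/camera/image_raw", received.append)

# Robot (Raspberry Pi) side: the camera node publishes a raw image message.
delivered = bus.publish("/camera/image_raw", {"width": 640, "height": 480, "encoding": "rgb8"})
bus.publish("/imu/data", {"ax": 0.0})  # no subscriber on this topic, so nobody receives it
```

Publishers and subscribers only share a topic name, never a direct reference to each other, which is what lets the camera, motor, and IMU nodes on the Pi stay independent of the SLAM code on the laptop.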

Introduction to ORB-SLAM

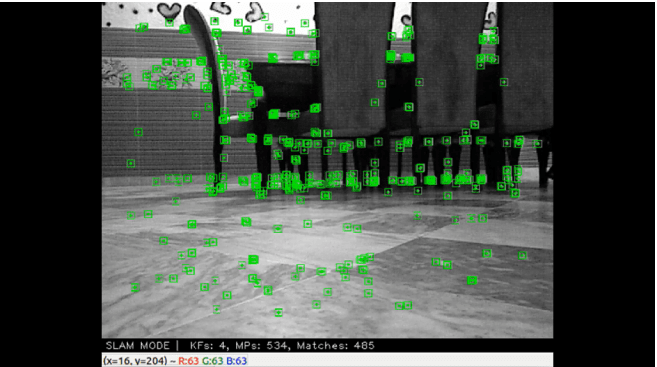

ORB (Oriented FAST and Rotated BRIEF) was developed by combining two techniques: FAST (Features from Accelerated Segment Test), a keypoint detector, and BRIEF (Binary Robust Independent Elementary Features), a keypoint descriptor. Using ORB features, ORB-SLAM detects and describes keypoints and performs tracking, mapping, and loop closure. As our robot moves through an environment, ORB-SLAM automatically tracks the robot, builds a map of its surroundings, and performs loop closure.
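The detector half of ORB, FAST, rests on a simple "segment test": a pixel is a corner if at least `n` contiguous pixels on a 16-pixel circle of radius 3 around it are all brighter, or all darker, than the center by more than a threshold `t`. The sketch below implements that test on a small grayscale image stored as nested lists. `n = 9` corresponds to the FAST-9 variant used by ORB; the threshold `t = 20` is an arbitrary illustrative value, not a setting taken from this project.

```python
from typing import Sequence

# Offsets (dx, dy) of the 16 pixels on a Bresenham circle of radius 3, in circular order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img: Sequence[Sequence[int]], x: int, y: int,
                   t: int = 20, n: int = 9) -> bool:
    """FAST segment test on a grayscale image stored as rows of ints.

    (x, y) must be at least 3 pixels away from the image border.
    """
    center = img[y][x]
    # Label each circle pixel: +1 brighter than center + t, -1 darker than center - t, else 0.
    labels = []
    for dx, dy in CIRCLE:
        p = img[y + dy][x + dx]
        labels.append(1 if p > center + t else (-1 if p < center - t else 0))
    # Scan the circle twice so that runs wrapping past index 0 are counted.
    run, prev = 0, 0
    for label in labels + labels:
        if label != 0 and label == prev:
            run += 1
        else:
            run = 1 if label != 0 else 0
        prev = label
        if run >= n:
            return True
    return False
```

On a straight vertical edge only 7 circle pixels differ from the center, so FAST-9 rejects it while an isolated dark dot passes; this is why FAST responds to corners rather than edges. ORB then adds an orientation to each corner (from the intensity centroid of its patch) and computes a rotated BRIEF descriptor; in practice a library implementation such as OpenCV's `cv2.ORB_create` would be used instead of hand-written code.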

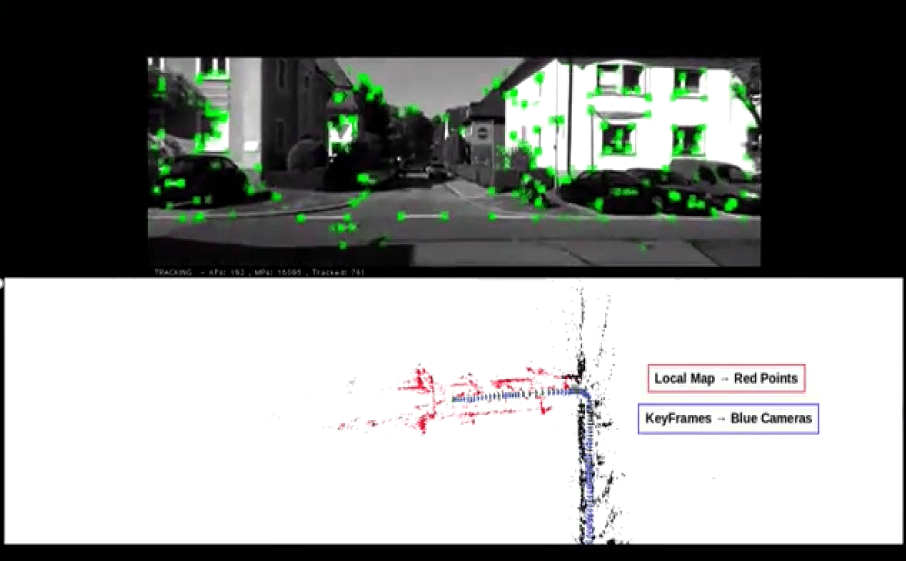

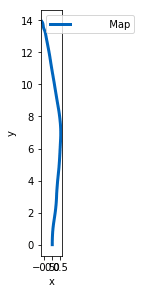

Tracking

Tracking is in charge of localizing the camera in every frame and of deciding when to insert a new keyframe, which it does by matching the features of the new frame with those of the previous frame. If tracking is lost because of an abrupt movement or an occlusion, the robot can re-localize by matching the features of the current frame against previously stored keyframes.
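Because ORB descriptors are binary strings (256 bits in ORB-SLAM), matching features between two frames amounts to comparing descriptors by Hamming distance, the number of differing bits. The sketch below is a brute-force nearest-neighbour matcher under that assumption, with descriptors stored as Python integers. The function names and the `max_dist` cutoff of 50 bits are hypothetical; ORB-SLAM itself does not compare every pair but narrows the search using a constant-velocity motion model.

```python
from typing import Dict, List


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(prev: List[int], curr: List[int], max_dist: int = 50) -> Dict[int, int]:
    """Brute-force match each current descriptor to its nearest previous one.

    Returns {index in curr: index in prev}; matches farther than max_dist bits are dropped.
    """
    matches: Dict[int, int] = {}
    if not prev:
        return matches
    for i, d in enumerate(curr):
        # Index of the previous-frame descriptor with the smallest Hamming distance.
        j = min(range(len(prev)), key=lambda k: hamming(d, prev[k]))
        if hamming(d, prev[j]) <= max_dist:
            matches[i] = j
    return matches
```

For example, with 8-bit toy descriptors `prev = [0b11110000, 0b00001111]` and `curr = [0b11110001, 0b00001110, 0b10101010]`, `match_descriptors(prev, curr, max_dist=2)` returns `{0: 0, 1: 1}`: the third descriptor is 4 bits away from both candidates and is discarded. XOR plus a bit count is very cheap, which is one reason binary descriptors suit real-time tracking.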

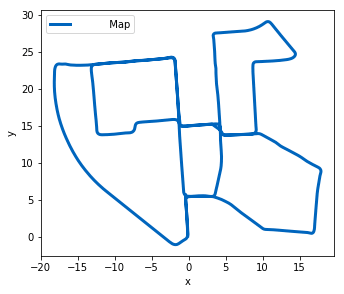

Mapping

As our robot moves from point to point, it detects keypoints (edges and corners) on the surrounding objects, stores them along with their measurements, and adds a node to the map. At the next point it again detects the edges and corners of its surroundings, updates the stored points and their measurements, adds a new node, and updates the covisibility graph.

The local mapping thread processes new keyframes and updates and optimizes their local neighborhood. New keyframes inserted by the tracking thread are stored in a queue that ideally contains only the last inserted keyframe. Each keyframe is transformed into its bag-of-words representation, which yields a bag-of-words vector that is used later by the loop closer.

A newly created map point is retained only if two conditions hold: tracking must find the point in more than 25 percent of the frames in which it is predicted to be visible, and, once more than one keyframe has passed since the point was created, it must be observed from at least three keyframes.
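The two retention conditions for new map points can be written as a small predicate. This is a minimal sketch assuming each map point keeps four counters; the `MapPoint` class and its field names are hypothetical bookkeeping, not ORB-SLAM 2's actual data structures.

```python
from dataclasses import dataclass


@dataclass
class MapPoint:
    """Hypothetical per-point bookkeeping; field names are illustrative."""
    created_kf: int  # id of the keyframe that created the point
    n_visible: int   # frames in which the point was predicted to be visible
    n_found: int     # frames in which tracking actually matched the point
    n_obs_kfs: int   # number of keyframes that observe the point


def keep_point(p: MapPoint, current_kf: int) -> bool:
    """Return True if a recently created map point passes both culling tests."""
    # Test 1: found in more than 25% of the frames where it should have been visible.
    if p.n_visible <= 0 or p.n_found / p.n_visible <= 0.25:
        return False
    # Test 2: once more than one keyframe has passed since creation,
    # the point must be observed from at least three keyframes.
    if current_kf - p.created_kf > 1 and p.n_obs_kfs < 3:
        return False
    return True
```

A point found in 5 of 10 expected frames and seen from 3 keyframes is kept, while one found in exactly 1 of 4 frames (25 percent, not more) is culled. Strict culling of this kind keeps spurious points, for example from wrong triangulations, out of the map.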

Loop Closure

At this stage, the loop closing thread takes the last keyframe processed by local mapping and tries to detect and close a loop. It computes the similarity between the bag-of-words vector of the current keyframe and those of its neighbors in the covisibility graph and keeps the lowest score as a threshold: earlier keyframes that score below it are rejected as loop candidates. If a loop is closed, the map points that match the new ones are fused so that duplicates are removed, new edges are added to the covisibility graph, and the robot adds the new node and stores it with the new points.
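The similarity step can be sketched with the L1 score used by DBoW2, `s(v1, v2) = 1 - 0.5 * | v1/|v1| - v2/|v2| |` (L1 norms), on sparse bag-of-words vectors that map word ids to weights. This is a simplified illustration under stated assumptions: the function names and the dictionary representation are hypothetical, and ORB-SLAM additionally requires consecutive consistent candidates and a geometric (similarity transform) check before accepting a loop.

```python
from typing import Dict, List

BowVector = Dict[int, float]  # word id -> weight (e.g. a tf-idf weight)


def l1_score(v1: BowVector, v2: BowVector) -> float:
    """Similarity in [0, 1]: 1 - 0.5 * || v1/|v1|_1 - v2/|v2|_1 ||_1."""
    n1 = sum(abs(w) for w in v1.values())
    n2 = sum(abs(w) for w in v2.values())
    if n1 == 0 or n2 == 0:
        return 0.0
    words = set(v1) | set(v2)
    diff = sum(abs(v1.get(k, 0.0) / n1 - v2.get(k, 0.0) / n2) for k in words)
    return 1.0 - 0.5 * diff


def loop_candidates(current: BowVector, neighbours: Dict[int, BowVector],
                    database: Dict[int, BowVector]) -> List[int]:
    """Keyframe ids scoring at least as high as the current keyframe's worst neighbour.

    Assumes `neighbours` (covisible keyframes) is non-empty.
    """
    s_min = min(l1_score(current, v) for v in neighbours.values())
    # Neighbours are excluded: they are already connected, so they cannot close a loop.
    return [kf for kf, v in database.items()
            if kf not in neighbours and l1_score(current, v) >= s_min]
```

Identical vectors score 1 and vectors with no words in common score 0. Using the weakest covisible neighbour as the threshold adapts the test to the scene: a keyframe is only a loop candidate if it looks at least as similar to the current view as the keyframes the robot has just passed.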

Robot Design

A master-slave relationship is created for the movement of the robot: the laptop serves as the master and the robot parts serve as slaves. Communication between master and slaves requires Wi-Fi or Bluetooth; Wi-Fi is the better option because it provides the higher bandwidth needed for reliable communication. The robot detects features by processing images taken by the camera. We use an Xbox 360 Kinect, which detects features over a wide range, even in poor light.

The communication between the server (laptop) and the robot nodes is handled by ROS (Robot Operating System). ROS Kinetic is installed on the Raspberry Pi 4, which takes information from the nodes and sends it to ROS installed on Ubuntu 16.04. The nodes for these parts are built by interfacing the servo motors with the Raspberry Pi 4 through a custom-built driver, the IMU-MPU9250 through the RTIMULib library, and the camera module through the V4L driver.

ROS is organized around nodes, and three nodes were made on the slave side. Each node publishes messages to a particular topic; for example, the camera node publishes raw, uncompressed images. These nodes communicate with ROS on the master (laptop) side, which collects the messages they publish. The key data received from the Raspberry Pi camera module is the RAW (uncompressed) image, which ORB-SLAM uses to map the environment. For this purpose, we run ORB-SLAM 2 on the server. ORB-SLAM 2 depends on Pangolin, Discrete Bag of Words (DBoW2), g2o, OpenCV, Eigen 3, and C++11.
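A quick calculation shows why bandwidth matters when raw images are streamed from the robot to the laptop. The sketch assumes a 640x480 RGB stream with 3 bytes per pixel at 30 frames per second; these figures are illustrative assumptions, not measurements from this robot.

```python
def raw_stream_rate(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Bits per second needed to stream uncompressed frames."""
    return width * height * bytes_per_pixel * 8 * fps


# Assumed stream: 640x480 RGB (3 bytes per pixel) at 30 frames per second.
rate = raw_stream_rate(640, 480, 3, 30)
print(f"{rate / 1e6:.1f} Mbit/s")  # prints "221.2 Mbit/s"
```

About 221 Mbit/s is roughly two orders of magnitude above Bluetooth Classic's 3 Mbit/s maximum and a considerable load even for Wi-Fi. With 1 byte per pixel (grayscale), the same call gives a third of that rate.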